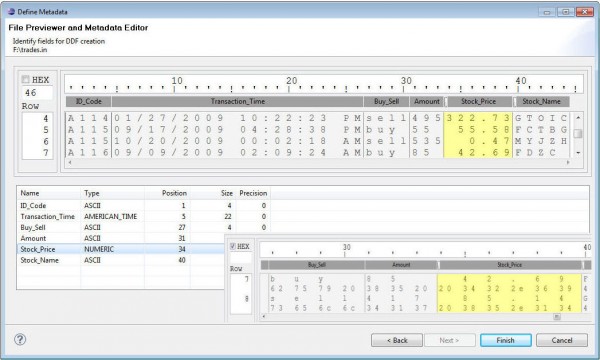

Using the Metadata Discovery Wizard

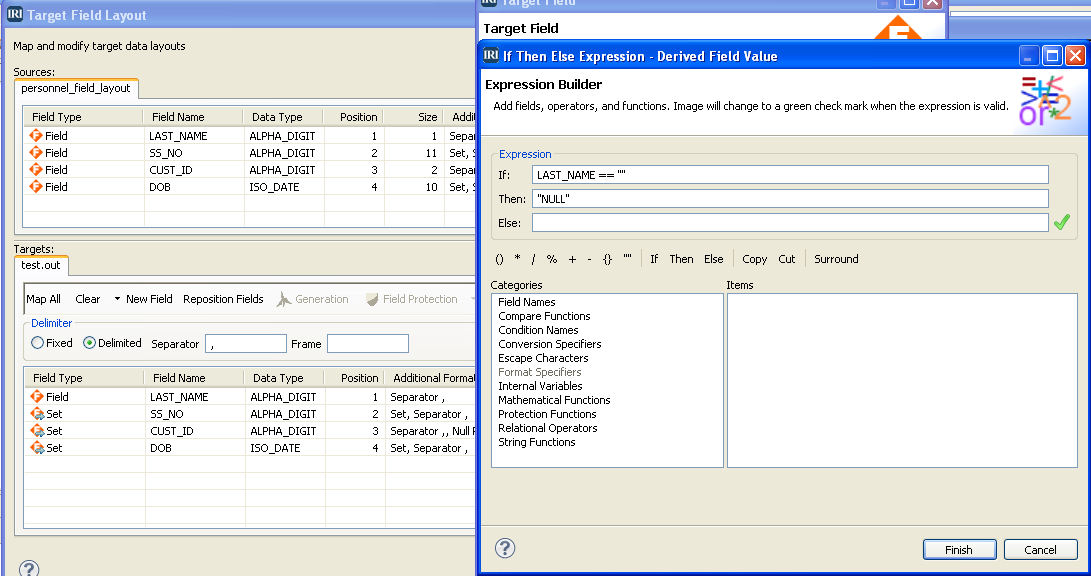

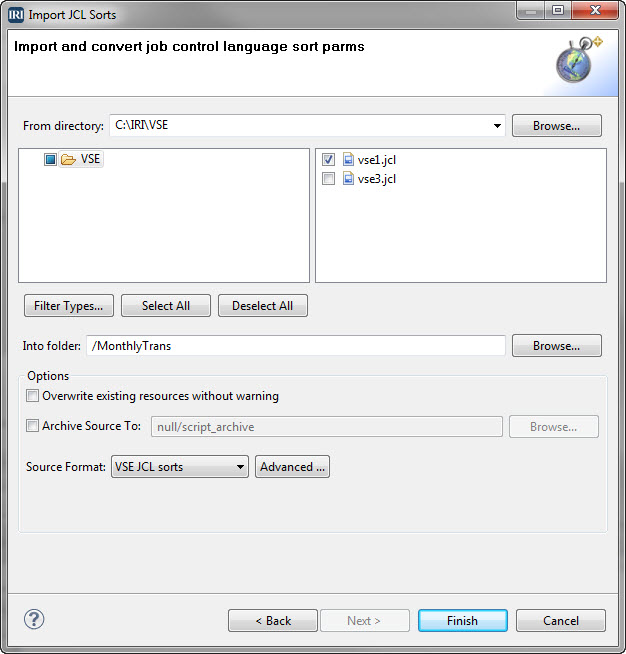

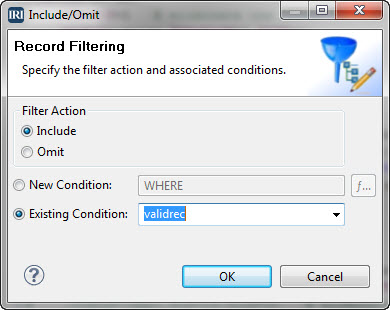

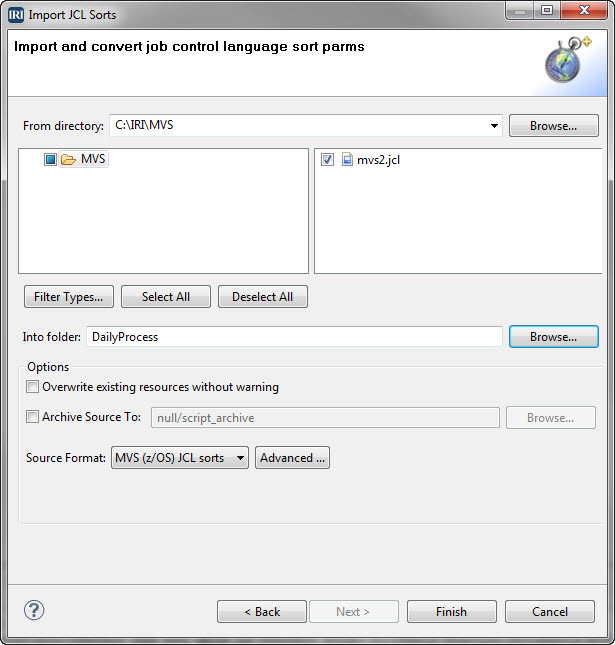

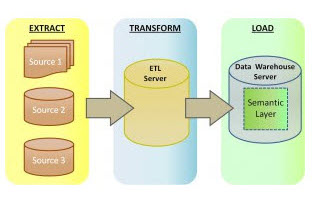

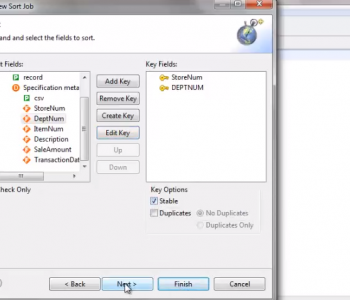

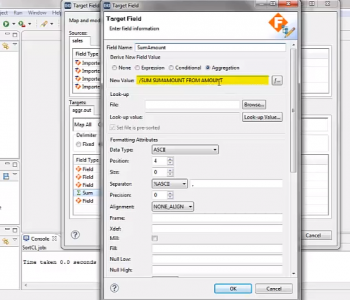

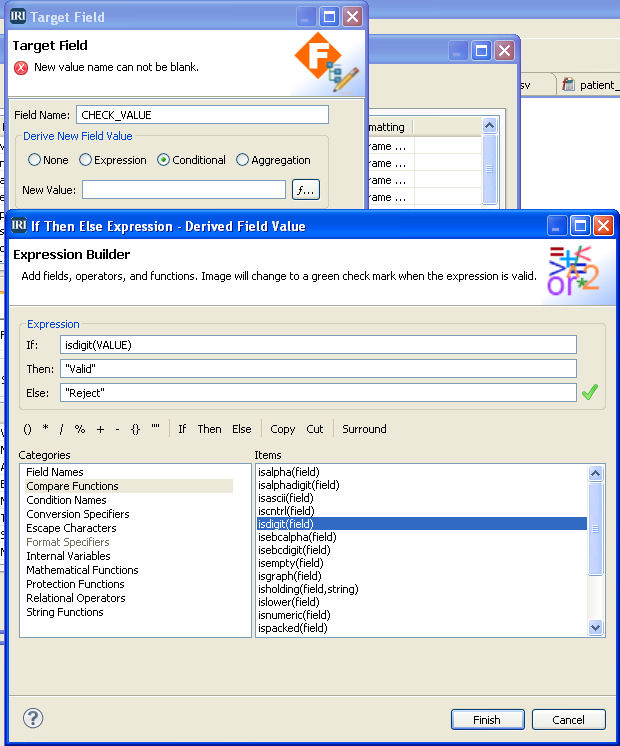

IRI’s data management tools share a familiar, self-documenting metadata language called SortCL. All of these tools, including CoSort, FieldShield, NextForm, and RowGen, require data definition file (DDF) layouts with /FIELD specifications for each data source so that you can map your data and manage your metadata.
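
To give a rough idea of what a DDF layout contains, the Python sketch below derives one /FIELD line per column from the header row of a delimited file. It is only an illustration: the function name `ddf_from_header`, the sample column names, and the exact attribute spelling (POSITION, SEPARATOR, TYPE) are assumptions rather than anything taken from this article, so check the SortCL documentation for the authoritative syntax.

```python
import csv
import io


def ddf_from_header(header_line, separator=","):
    """Return one /FIELD line per column named in a delimited header row.

    Hypothetical helper: the /FIELD attribute names used below are
    assumptions for illustration, not a verified SortCL reference.
    """
    # csv.reader copes with quoted column names that contain the separator.
    names = next(csv.reader(io.StringIO(header_line), delimiter=separator))
    lines = []
    for position, name in enumerate(names, start=1):
        # Every column is treated as plain text here; a real layout
        # would assign data types per field.
        lines.append(
            f"/FIELD=({name.strip().upper()}, POSITION={position}, "
            f'SEPARATOR="{separator}", TYPE=ASCII)'
        )
    return lines


if __name__ == "__main__":
    # Sample header with made-up column names.
    for line in ddf_from_header("id,name,balance"):
        print(line)
```

A real layout would also need the correct data type for each field, which is the kind of detail a discovery wizard is meant to fill in for you.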