Informatica Pushdown Optimization with CoSort

Big data integration activities can happen outside the database in an extract, transform, load (ETL) environment, or inside the database in ELT:

http://www.iri.com/blog/data-transformation2/etl-vs-elt-we-posit-you-judge/

One example of an ELT operation would be Informatica’s Pushdown Optimization option, in which users transform data in a relational database like Oracle, or in Teradata. According to Informatica, this approach “allows the IT systems and their operators to respond to changing demands and peak processing needs.”

IRI and its customers believe that the work of big data transformation does not belong in databases designed for storage and retrieval. Transformations in the database layer can burden the database and the whole system, drawing resources from data storage maintenance, and slowing down queries.

Speed in any circumstance, whether an ETL or ELT operation, is always critical to the equation. The IRI CoSort engine, and the modern IRI Voracity platform which uses it (or Hadoop), both support the ETL paradigm. They optimize big data integration performance through superior transformation algorithms, task consolidation, and file system resource exploitation. For more information, see:

http://www.iri.com/solutions/data-integration

Such external operations are more efficient, affordable, and remove the burdens of data integration and staging from BI, database and legacy ETL tools, freeing them to do the jobs they do best instead. Teradata, Hadoop, in-memory and columnar databases, and ELT appliances are also much more expensive and complicated ‘first resort’ options than CoSort!

How Does this Work?

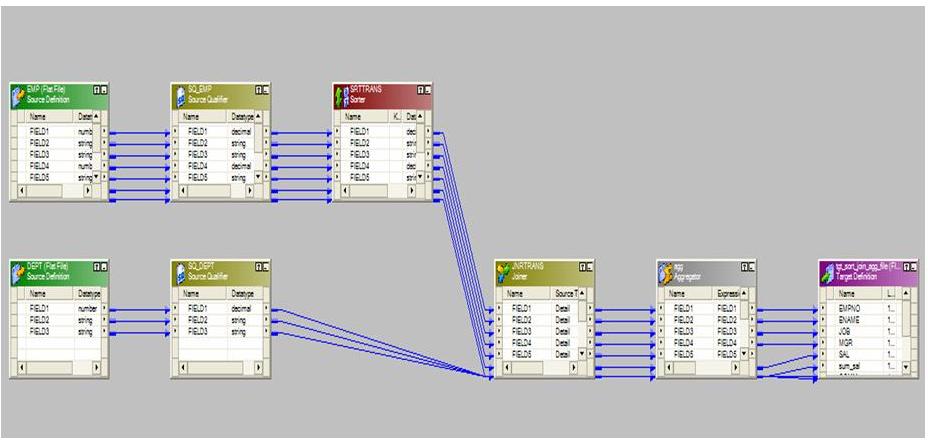

IRI is frequently approached by customers using platforms like Informatica PowerCenter who need to accelerate production jobs, where jobs like sorting, joining and aggregation are staged separately:

With CoSort, all data transformation tasks run a single job script and I/O pass. Those jobs can also include filtering and conversion, an extensive array of field-level protections, custom reformatting and reporting with math functions, special layouts, multiple targets, and so on.

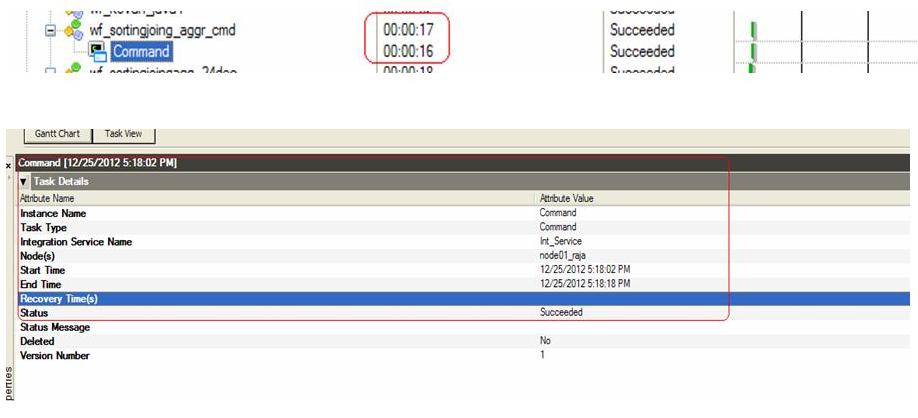

The difference CoSort data transformation makes can be seen even on a laptop. An independent consultant using PowerCenter 8.6 under Windows XP SP3 on an Intel® Core™ CPU M370 @2.4GHz with 3 GB of DDR3 memory defined sort, join, and aggregate stages for 100MB and 53MB flat files, and ran them all in 94 seconds. That same job ‘pushed out’ to a single CoSort Sort Control Language (SortCL) program in the file system produced the same results “from” Informatica in 16 seconds.

“Push out” optimization from Informatica to CoSort is straightforward:

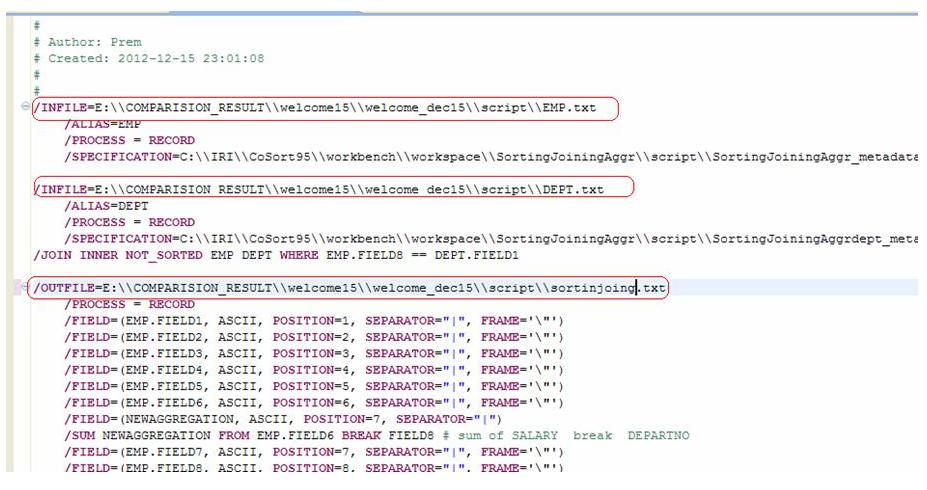

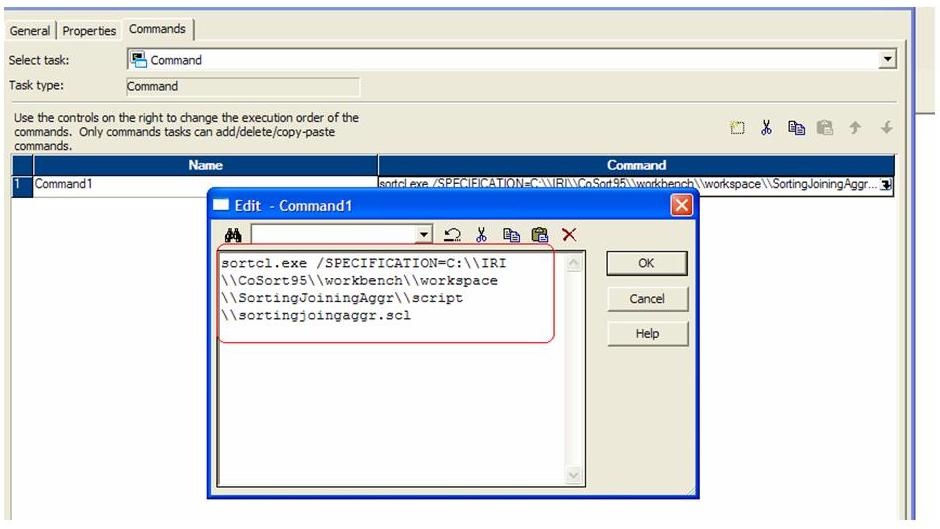

1. Create a simple SortCL language program like the one below in any text editor or new job wizard in the IRI Workbench GUI, built on Eclipse. The job runs on the command line ($sortcl /spec=script.scl) and can be called through the same PowerCenter “Command Task” that runs any .exe file.

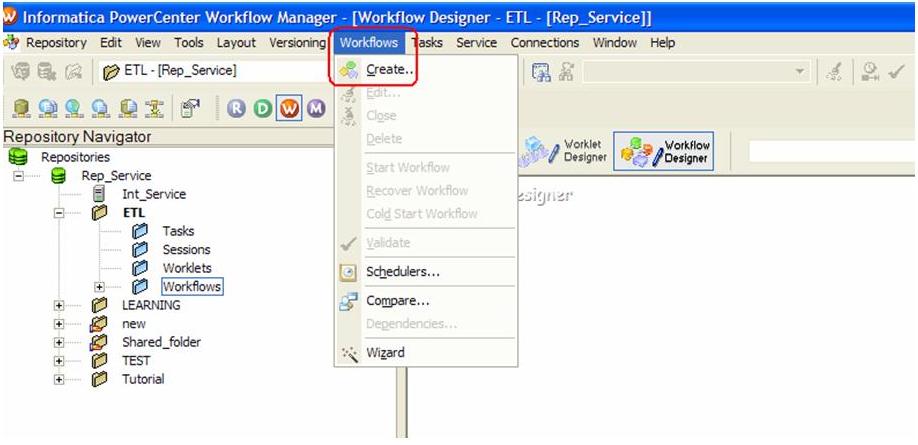

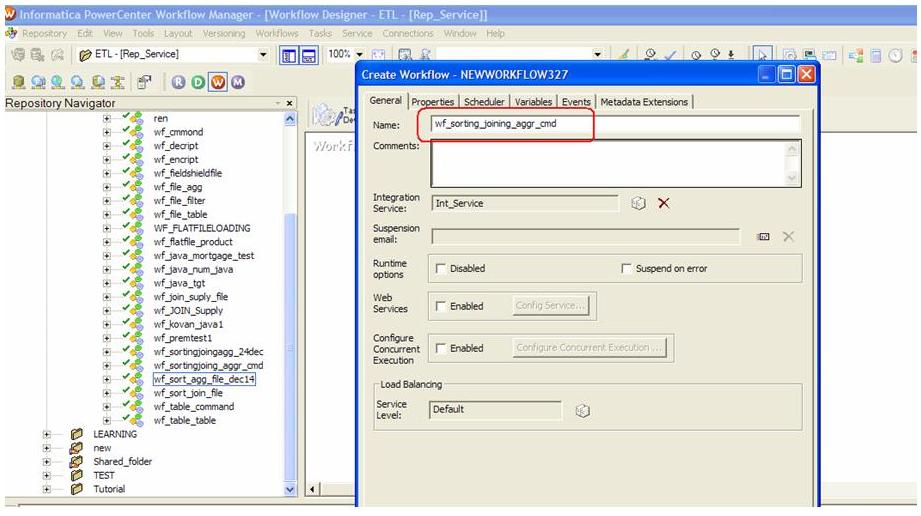

2. To create such a command task in PowerCenter, select “Workflow” from the upper menu and then select “create”:

3. Name the workflow. For example, wf_sorting_joining_aggr_cmd

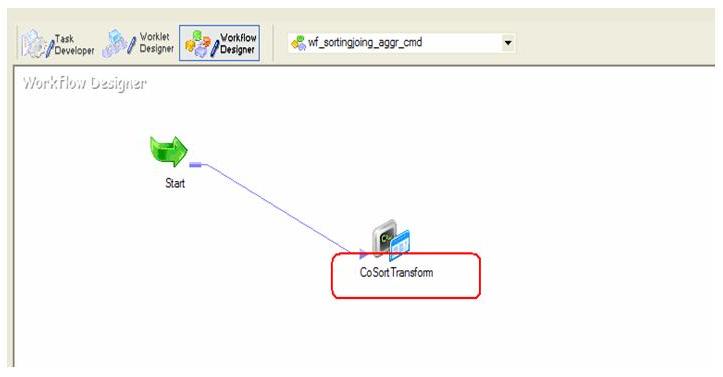

4. To display the command in the workflow designer, select command task from the upper tool bar and create the actual command that will run:

5. This task will call the SortCL job in the command task. The task itself can be edited to call any job; in this case the SortCL job script “sortjoinaggr.scl.”

6. The consultant’s last image shows the CoSort/SortCL job completed from Informatica in 16 seconds; while the original job in Informatica took 94:

Contact info@iri.com if you are interested in optimizing your ETL tools’ transformations through a simple call to CoSort. See this article if you’re interested in re-platforming Informatica ETL jobs to the same jobs in IRI Voracity jobs via AnalytiX DS technology to save time and money.

FYI, Voracity is IRI’s ‘total data management platform’ which uses CoSort or Hadoop engines (but the same metadata either way). Voracity combines:

- data discovery (DB & flat-file profiling, classification, ERDs, dark data)

- data integration (ETL, CDC, SCD, etc.),

- data migration (for databases, file formats, data types, etc.) and replication

- data governance (cleansing, masking, EMM, MDM, etc.)

- analytics (embedded reporting and data preparation)

all in the same Eclipse GUI. Voracity is also compatible with AnalytiX DS Mapping Manager and CATfx templates for managing ETL metadata stratification, creation, lineage, and conversion.

1 COMMENT

[…] CoSort is now a proven pushdown optimization option for Informatica, with significant time and/or cost savings over the official Oracle and Teradata options. For more information on the benchmark, and inquire about a free trial, see the blog article: Informatica Pushdown Optimization with CoSort. […]