Data Anonymization vs. Pseudonymization in DarkShield

In an age where data privacy is under the microscope, selecting the right strategy for protecting sensitive information is no longer optional—it’s mandatory. For developers, data officers, and AI teams, deciding between a data anonymization tool and pseudonymization isn’t just a technical choice—it’s a strategic one. IRI FieldShield and IRI DarkShield support both techniques, but which one fits your use case best?

This article breaks down the differences, strengths, and use cases of both anonymization and pseudonymization within DarkShield to help you choose the best approach for your compliance and security needs.

What is Data Anonymization?

Anonymization is the irreversible transformation of personal data so an individual can no longer be identified—directly or indirectly. Once anonymized, the data cannot be connected back to a person, even when combined with other datasets.

Within DarkShield, the data anonymization functions like blurring allow enterprises to completely scrub personally identifiable information (PII) from unstructured data sources—think EDI files, PDFs, images, and databases —while maintaining data utility for analytics and training AI models.

What is Pseudonymization?

Pseudonymization, on the other hand, is the reversible process of replacing private identifiers with fake identifiers or pseudonyms. It ensures that data can be re-linked to an individual, but only with access to additional information stored separately.

Pseudonymization is particularly useful for applications where re-identification is necessary under controlled conditions—such as in AI model training, where feedback loops are essential.

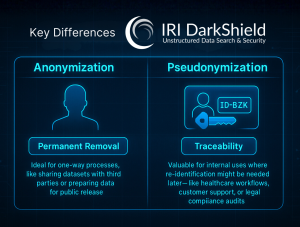

Key Differences in DarkShield

The IRI DarkShield data masking tool is engineered to support both techniques flexibly across a wide variety of data sources and silos. Here’s how the two approaches diverge in practice:

- Anonymization ensures permanent removal, so it’s ideal for one-way processes, like sharing datasets with third parties or preparing data for public release.

- Pseudonymization offers traceability, making it valuable for internal uses where re-identification might be needed later—like healthcare workflows, customer support, or legal compliance audits. This requires the use of a lookup set when creating the pseudonyms.

Use Case Scenarios: Which Should You Use?

When using DarkShield, choosing between anonymization and pseudonymization depends on your specific use case.

1. Healthcare AI Pipelines

When working with healthcare data, anonymization is typically the safer route, especially when HIPAA compliance is involved. Once data is de-identified using the data anonymization tool, it can be used for training machine learning models without risking exposure to PHI.

2. Fraud Detection and Risk Modeling

If your AI model requires tracking behavior over time, pseudonymization makes more sense. DarkShield can pseudonymize customer names or account numbers while preserving longitudinal data continuity for fraud detection systems.

3. GDPR and CCPA Compliance

Pseudonymization meets the data minimization principles in regulations like GDPR, as long as the mapping keys are securely stored. DarkShield enables organizations to stay compliant by facilitating secure key management.

4. Customer Service Chat Logs

Anonymization might be preferred when archiving large sets of customer interactions for NLP model training. DarkShield’s rule-based redaction and replacement options automate this at scale. See the full list of data masking functions here.

AI and Data Privacy: What’s at Stake?

AI models are only as ethical as the data they consume. With global scrutiny mounting around how companies collect, process, and use data, ensuring proper privacy frameworks is no longer negotiable.

The General Data Protection Regulation (GDPR), California Consumer Privacy Act (CCPA), and other mandates like Brazil’s LGPD have placed strict limitations on the handling of personal data. Using a robust data anonymization tool or pseudonymization within DarkShield helps organizations build defensible, responsible AI pipelines.

Integrating with Your Data Stack

IRI DarkShield integrates easily with multiple data environments, whether you’re pulling sensitive values from logs, Excel sheets, JSON files, images, or databases. As a data classification tool, it provides advanced search, match, and remediation functions, so developers can tailor masking operations to very specific PII patterns.

Moreover, DarkShield also connects with the IRI Voracity platform for textual ETL to join data in structured, semi-structured, and unstructured formats, making it an enterprise-grade solution that fits AI workflows as well as regulatory requirements.

Why This Matters for Your AI Pipeline

In the AI space, context and accuracy matter—but so does privacy. Applying the wrong strategy can not only taint model performance but also expose your organization to legal risks. The key lies in applying:

- Anonymization when the data will be analyzed in bulk, used for external sharing, or public-facing purposes.

- Pseudonymization when the analysis depends on patterns linked to individuals—without needing to expose their real identities.

By leveraging the flexibility in DarkShield, teams can apply a mix of techniques, starting with anonymization and layering in pseudonymization as required downstream.

FAQs

Q1: What is the difference between anonymization and pseudonymization under GDPR?

Anonymization removes any link between the data and the individual, while pseudonymization replaces identifiers with pseudonyms that can be reversed with a key. Under GDPR, anonymized data is outside the scope of the law, whereas pseudonymized data remains personal data.

Q2: Can DarkShield be used to anonymize PDF files and emails?

Yes. DarkShield supports structured, semi-structured, and unstructured data, including PDF documents, emails, XML, JSON, and log files. Its rules-based engine scans for PII and applies real-time masking.

Q3: Is pseudonymized data safer than encrypted data?

Not necessarily. While both protect data, encryption secures it through cryptographic methods, while pseudonymization allows for selective re-identification. Combining both techniques can provide layered security.

Q4: Do I need both anonymization and pseudonymization in my AI model workflow?

It depends on your use case. Many organizations use anonymization to protect sensitive information during model training and pseudonymization for validation and auditing purposes.

Final Thoughts

Navigating the world of data protection isn’t simple, especially when AI is involved. But with PII masking tools like IRI DarkShield, developers and data teams have a robust toolkit that supports both data anonymization and pseudonymization at scale.

Choosing between the two approaches isn’t just a technical decision—it’s a strategic one that reflects how your organization values transparency, compliance, and ethical AI practices. When you need to balance utility and security, DarkShield delivers the control, flexibility, and power to help you get it right.